How to install ROS drivers for Azure Kinect on Ubuntu 16.04

Update: see this Google Doc for the most up-to-date instructions on setting up Azure Kinect ROS drivers on Ubuntu 16.04. Many thanks to Sarthak Shetty, Kevin Zhang, and Jamie Chen for updating the instructions!

Following up from my previous post on installing the Azure Kinect SDK on Ubuntu 16.04, this post provides instructions for setting up ROS drivers for the Azure Kinect. These instructions apply to ROS Kinetic and Ubuntu 16.04.

Installation steps

- Install the Azure Kinect SDK executables on your path so they can be found by ROS.

      $ cd path/to/Azure-Kinect-Sensor-SDK/build
      $ sudo ninja install

- Clone the official ROS driver into a catkin workspace. [1]

      $ cd catkin_ws/src
      $ git clone https://github.com/microsoft/Azure_Kinect_ROS_Driver.git

- Make minor edits to the codebase. If you were to build the workspace now, you would get errors relating to `std::atomic` syntax. To fix this, open `<repo>/include/azure_kinect_ros_driver/k4a_ros_device.h` and convert all instances of `std::atomic_TYPE` type declarations to `std::atomic<TYPE>`. Below is a diff of the edits I made.

      @@ -117,11 +117,11 @@ class K4AROSDevice
           volatile bool running_;

           // Last capture timestamp for synchronizing playback capture and imu thread
      -    std::atomic_int64_t last_capture_time_usec_;
      +    std::atomic<int64_t> last_capture_time_usec_;

           // Last imu timestamp for synchronizing playback capture and imu thread
      -    std::atomic_uint64_t last_imu_time_usec_;
      -    std::atomic_bool imu_stream_end_of_file_;
      +    std::atomic<uint64_t> last_imu_time_usec_;
      +    std::atomic<bool> imu_stream_end_of_file_;

           // Threads
           std::thread frame_publisher_thread_;

- Build the catkin workspace with either `catkin_make` or `catkin build`.

- Copy the libdepthengine and libstdc++ binaries that you placed in the `Azure_Kinect_SDK/build/bin` folder (see my previous post) into your catkin workspace.

      $ cp path/to/Azure_Kinect_SDK/build/bin/libdepthengine.so.1.0 path/to/catkin_ws/devel/lib/
      $ cp path/to/Azure_Kinect_SDK/build/bin/libstdc++.so.6 path/to/catkin_ws/devel/lib/

  You will have to do this whenever you do a clean build of your workspace.

- Copy udev rules from the ROS driver repo to your machine.

      $ sudo cp /path/to/Azure_Kinect_ROS_Driver/scripts/99-k4a.rules /etc/udev/rules.d/

  Writing to `/etc/udev/rules.d/` requires root, hence the `sudo`. Unplug and replug your sensor after copying the file over.

- Source your built workspace and launch the driver.

      $ source path/to/catkin_ws/devel/setup.bash
      $ roslaunch azure_kinect_ros_driver driver.launch

  Note that there are parameters you can adjust in the driver launch file, e.g. FPS, resolution, etc.

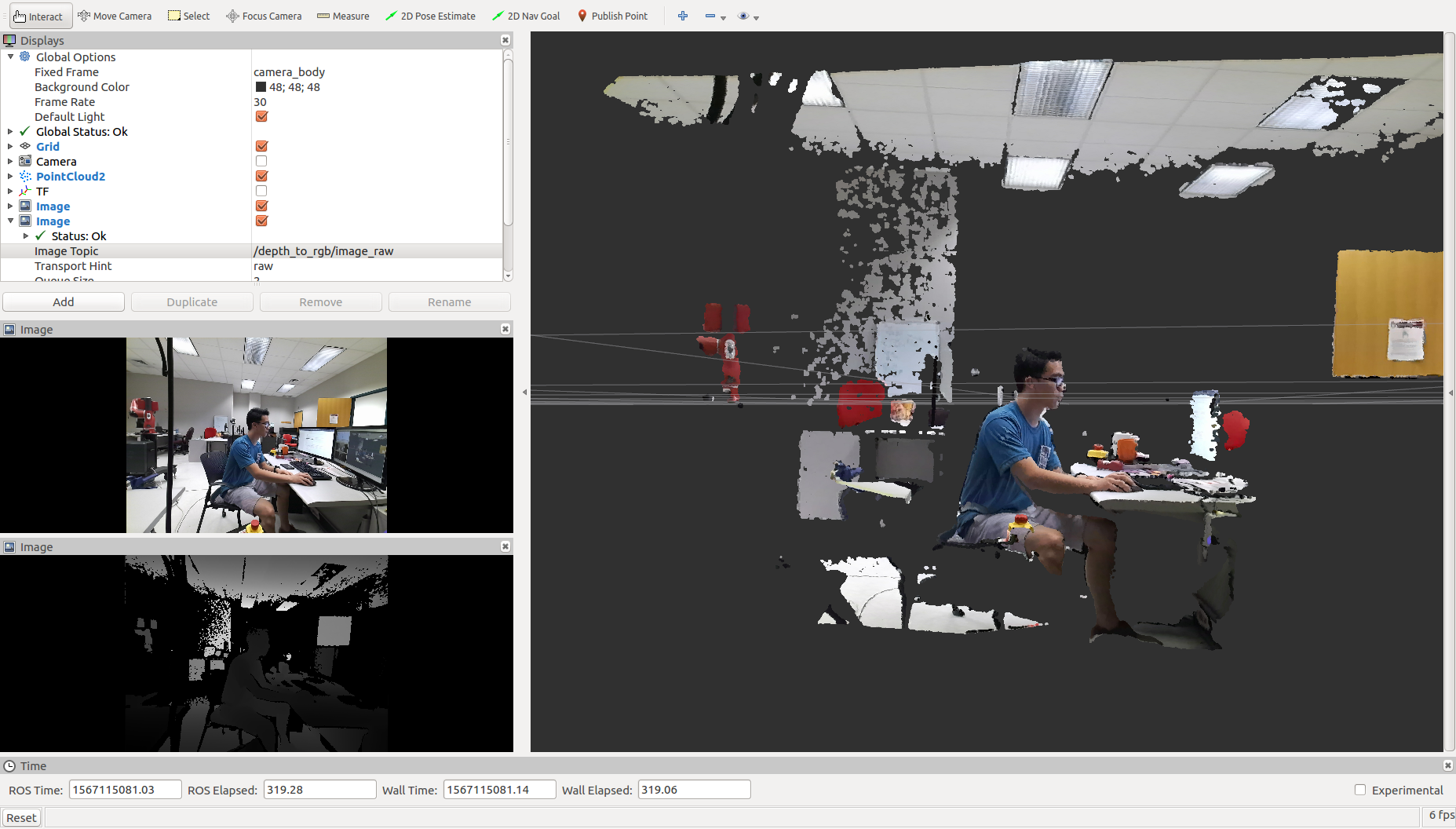

- Run Rviz and you should be able to open Image and PointCloud2 widgets that read topics from the sensor!
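
The `std::atomic` header edit in the installation steps above can also be applied mechanically. Below is a minimal sketch, assuming GNU `sed` (the default on Ubuntu) and assuming that only the three type aliases shown in the diff need changing. The function name `fix_atomic_types` is hypothetical, and it is worth checking the result with `git diff` before building.

```shell
# Hypothetical helper: rewrite the three std::atomic_TYPE aliases from the
# diff above into std::atomic<TYPE> form, editing the given header in place.
fix_atomic_types() {
    local header="$1"
    # Only the aliases that appear in the diff are matched, so functions
    # such as std::atomic_load are left untouched.
    sed -i -E 's/std::atomic_(int64_t|uint64_t|bool)\b/std::atomic<\1>/g' "$header"
}

# Example call (the path is a placeholder, as in the steps above):
# fix_atomic_types path/to/Azure_Kinect_ROS_Driver/include/azure_kinect_ros_driver/k4a_ros_device.h
```

Running the function a second time changes nothing, since the rewritten declarations no longer match the pattern.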

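Because the two shared libraries have to be copied again after every clean build, it can help to wrap that step in a small shell function. This is only a sketch using the same placeholder paths as the steps above. The function name `copy_k4a_libs` and its two arguments are hypothetical.

```shell
# Hypothetical helper: copy the depth engine and libstdc++ binaries from the
# SDK build folder into the catkin workspace's devel/lib folder.
# Usage: copy_k4a_libs <sdk_bin_dir> <catkin_ws_dir>
copy_k4a_libs() {
    local sdk_bin="$1"
    local ws_lib="$2/devel/lib"
    local lib

    # Create devel/lib if it does not exist yet.
    mkdir -p "$ws_lib" || return 1

    for lib in libdepthengine.so.1.0 libstdc++.so.6; do
        # Fail early with a message if a binary is not where it is expected.
        if [ ! -e "$sdk_bin/$lib" ]; then
            echo "missing: $sdk_bin/$lib" >&2
            return 1
        fi
        cp "$sdk_bin/$lib" "$ws_lib/" || return 1
    done
}

# Example call after rebuilding the workspace:
# copy_k4a_libs path/to/Azure_Kinect_SDK/build/bin path/to/catkin_ws
```

The function returns a non-zero status when either binary is missing, so it can be chained after the build command with `&&`.
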
Footnotes

1. I used commit `be9a528ddac3f9a494045b7acd76b7b32bd17105`, but a later commit may work.